Sci-fi eye

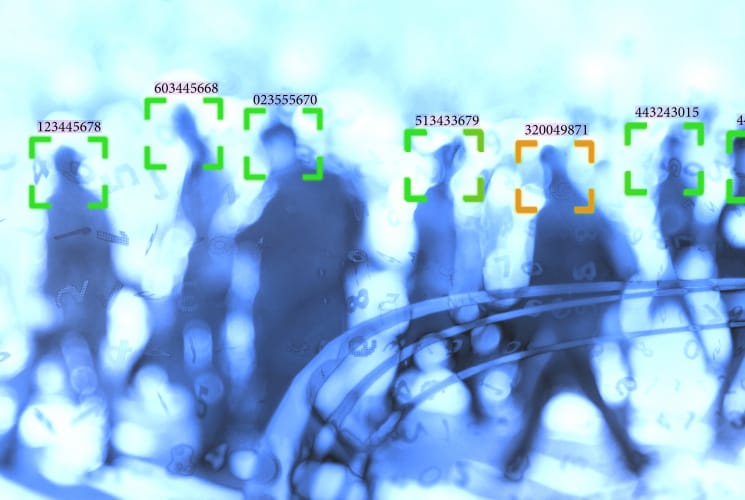

If you’re scared now, just wait until you see the future of face recognition technology, writes Gareth L Powell

October’s issue of The Engineer featured an article discussing the controversy surrounding trials of live facial recognition (LFR) technology by South Wales Police and the Metropolitan Police.

Put simply, LFR technology enables a computer connected to a surveillance system to recognise individuals in real time, which could allow those individuals to be tracked and behavioural profiles compiled. But while this kind of state scrutiny might set off alarm bells in those of us who have read 1984, there are implications to this technology that not even George Orwell could have foreseen.

As a science fiction writer, my job is to look not at how people will be using a piece of technology tomorrow, but to imagine what they might be doing with it ten, fifty, even a hundred years from now.

So, what might the world look like by the end of the next decade if we assume that we’ll have LFR technology that’s sophisticated enough not to be easily fooled by sunglasses or beards, and cheap enough to be accessed by most organisations and even some individuals?

Perhaps your employer will require you to spend a certain amount of time at the gym each week in order to retain your health benefits. They will also know which shops you frequent and probably what you buy there, as well as how many times a week you visit a bar.

Investigators will be able to track the movements of cheating spouses. Self-driving taxis will recognise you and allow you to choose a destination from your ‘favourites’ list, while playing you a selection of music from your online playlists. Smart panels on the walls of shopping centres, offices and hotels will change not only the advertisements they display for you, but also the entire décor of the space you’re in.

But what happens if this technology gets abused? I’m not talking about a kind of Big Brother state panopticon, as that appears inevitable. Rather, I’m talking about more unscrupulous adaptations.

What if neighbourhoods and shopping malls refuse entry to known or suspected offenders? What if airlines deny service to customers they consider ‘high risk’ based on their ethnicity?

Journalists and stalkers could easily track the movements of celebrities using drones able to scan and recognise faces. On a more disturbing note, this technology could be used for assassinations, allowing a drone carrying a few grams of high explosive to select and pursue a target.

Of course, the deployment of LFR technology will lead to the development of countermeasures. The most basic of these might be as simple as a large parka hood, perhaps paired with mirrored sunglasses or contact lenses that change the colour of the eyes. More sophisticated methods could employ dazzle camouflage: make-up that creates optical illusions on the face and obscures its exact proportions, or patterns that register as interference when viewed through a camera. One can also picture backstreet surgeons altering a person’s facial appearance to supply them with a new identity in a world where your face is your passport.

With the rise of deep-fake technology, it’s also not unreasonable to imagine members of the public being able to 3D-print masks in the exact likeness of celebrities or politicians, either to obscure their own movements or to frame those individuals for crimes or infidelities. If identity theft is a problem in 2019, imagine how much worse it would be if a criminal stole your face and used it to access your bank account, cast fraudulent votes, or even place you at the scene of a murder.

Looking further into the future, we might consider the development of a self-aware artificial intelligence. What might such a creature do if it recognised the technicians who were coming to shut it down? Perhaps it might use some of the methods mentioned above to track down and eliminate those opposed to its existence, or those involved in competing projects. It might even seek out and kill its designers in order to ensure it remains one of a kind, unchallenged in its digital existence.

Gareth L Powell is a science-fiction novelist born in Bristol and educated in Wales. His novels include the Ack-Ack Macaque trilogy, and the Embers of War space opera trilogy.