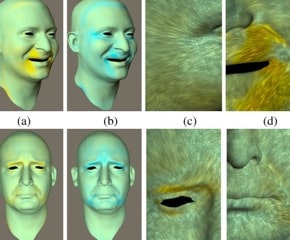

The engineers created more realistic virtual characters by capturing details of the skin at a resolution of approximately ten microns (0.01 mm).

The technology images the microscopic geometry of patches of facial skin as they are stretched and compressed. This geometry is then analysed and compared with the same skin in its neutral, undeformed state. These findings enabled the researchers to build a model of how skin changes at the microscopic level during facial expressions.

Current scanning technology can use motion capture to record how skin moves at mid-scale resolutions of below a millimetre. However, the dynamics of skin microstructure, below a tenth of a millimetre, cannot be captured directly. Facial skin and its folds have never been captured in fine detail around the eyes, nose and mouth, and as a result gaming characters look less lifelike.

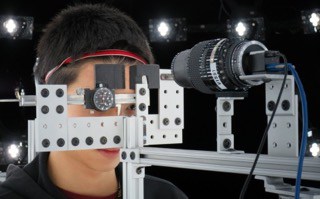

The intricate facial details are captured using a measurement setup made up of several components. A camera fitted with a macrophotography lens takes high-resolution images of a patch of facial skin. A specialised LED sphere illuminates the patch with polarised spherical gradient illumination, a form of controlled lighting that lets the researchers illuminate the subject under four different lighting conditions so that the texture, shape and reflectance of the skin can be measured.
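As a rough illustration of how lighting patterns like these can reveal shape, here is a minimal sketch based on the commonly published spherical gradient formulation. The article does not describe how the Imperial team processes its images, so this is an assumption. The sketch takes the four conditions to be three linear gradients along the x, y and z axes plus one uniform "full-on" pattern. For each pixel, the ratio of a gradient-lit image to the full-on image, rescaled from the range 0 to 1 onto -1 to 1, then estimates one component of the surface normal. The function names `normal_from_gradients` and `normal_map` are hypothetical.

```python
import math


def normal_from_gradients(i_x, i_y, i_z, i_full, eps=1e-8):
    """Estimate a unit surface normal for one pixel.

    Assumes (hypothetically) that i_x, i_y, i_z are intensities under linear
    gradient patterns along x, y and z, and i_full is the intensity under
    uniform full-on lighting.
    """
    full = max(i_full, eps)  # avoid division by zero in dark pixels
    # Each ratio lies in [0, 1]; map it to a normal component in [-1, 1].
    n = [2.0 * i / full - 1.0 for i in (i_x, i_y, i_z)]
    length = max(math.sqrt(sum(c * c for c in n)), eps)
    return tuple(c / length for c in n)  # normalise to unit length


def normal_map(img_x, img_y, img_z, img_full):
    """Apply normal_from_gradients to four equally sized 2-D images (lists of rows)."""
    return [
        [normal_from_gradients(x, y, z, f) for x, y, z, f in zip(rx, ry, rz, rf)]
        for rx, ry, rz, rf in zip(img_x, img_y, img_z, img_full)
    ]
```

For example, a pixel reading 0.5, 0.8 and 0.9 under the three gradients and 1.0 under full-on lighting gives a normal of roughly (0.0, 0.6, 0.8). A real pipeline would also need camera and light calibration, and would separate diffuse from specular reflection, which is what polarised lighting is typically used for.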

The setup also includes a skin measurement gantry with callipers that compress or stretch patches of the subject's facial skin while the camera's macro lens records the deformation. The data are sent to a computer, which builds a map of the face at the microscopic level.

The research was led at Imperial by Dr Abhijeet Ghosh from the Department of Computing.

In a statement, Dr Ghosh said: “Digital faces are becoming increasingly realistic when their facial expressions are static. However, the challenge going forward is to develop realistic faces when they are animated, which would heighten the movie and gaming experience for users. Our work takes us one step closer to that goal.”

The engineers hope to develop the technology further so that it can be applied in visual effects and games to improve the realism of digital characters.
